AWS Bedrock Embedder

What is the AWS Bedrock Embedder Node?

The AWS Bedrock Embedder Node converts text, such as documents or paragraphs, into embeddings: lists of numbers (vectors) that represent the meaning of the text. Texts with similar meanings produce vectors that are close to each other, which lets downstream nodes find related information quickly, for example in semantic search.

How do I use it?

- Set Up AWS Permissions: Make sure your AWS account has

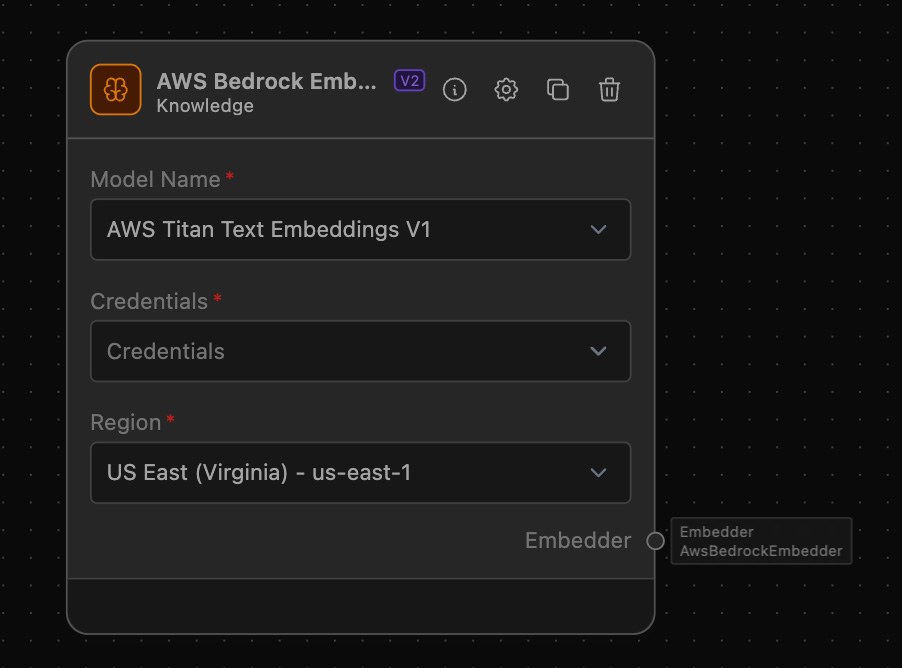

AmazonBedrock_FullAccessenabled. - Add the Node: Pull the AWS Bedrock Embedder Node into your workspace from the list of available nodes.

- Configure Credentials: Select the credentials that have the required permissions.

- Configure the Embedder: Select the embedding model you want to use.

- Set Up the Flow: Connect the embedder to the downstream nodes that require an embedder as input.
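For readers who want to see what an embedding request to Bedrock looks like, here is a minimal Python sketch using boto3. It assumes AWS Titan Text Embeddings V2 with the model ID `amazon.titan-embed-text-v2:0`, the `us-east-1` region, and credentials from the default boto3 credential chain. It is an illustration, not the node's actual implementation, and the helper names are hypothetical. boto3 is imported only inside `embed_text`, so the request and response helpers can be tried without AWS access.

```python
import json
from typing import Any

# Assumed Bedrock model ID for AWS Titan Text Embeddings V2.
TITAN_V2_MODEL_ID = "amazon.titan-embed-text-v2:0"


def build_titan_v2_body(text: str, dimensions: int = 1024, normalize: bool = True) -> str:
    """Serialize a Titan Text Embeddings V2 request body (hypothetical helper)."""
    if dimensions not in (256, 512, 1024):
        raise ValueError("Titan V2 supports 256, 512, or 1024 dimensions")
    return json.dumps({"inputText": text, "dimensions": dimensions, "normalize": normalize})


def parse_titan_response(raw: bytes) -> list[float]:
    """Extract the embedding vector from a Titan response payload."""
    payload: dict[str, Any] = json.loads(raw)
    return payload["embedding"]


def embed_text(text: str, region: str = "us-east-1", dimensions: int = 1024) -> list[float]:
    """Call Bedrock with the default credential chain (requires AWS access)."""
    import boto3  # imported lazily so the helpers above work without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=TITAN_V2_MODEL_ID,
        body=build_titan_v2_body(text, dimensions),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read it and pull out the vector.
    return parse_titan_response(response["body"].read())
```

For example, `embed_text("Solar panels convert sunlight into electricity.", dimensions=512)` would return a list of 512 floats, provided your credentials have access to the model in that region.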

Required AWS IAM Permissions

To use this node, the AWS identity behind your credentials needs the following permissions:

AmazonBedrockFullAccess: This AWS managed policy grants full access to Amazon Bedrock, which this node needs to work properly.
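The full-access managed policy grants far more than embedding calls need. If your organization requires least privilege, one option is a narrower policy that allows only `bedrock:InvokeModel` on the models you select. The Python sketch below generates such a policy document. It assumes the node only calls `InvokeModel`, which this page does not confirm, so test it in a non-production account before replacing the managed policy. The model list is a hypothetical example.

```python
import json

# Hypothetical set of models this flow is allowed to call.
ALLOWED_MODELS = ["amazon.titan-embed-text-v2:0", "cohere.embed-english-v3"]


def invoke_only_policy(region: str, model_ids: list[str]) -> dict:
    """Build an IAM policy allowing bedrock:InvokeModel on specific foundation models."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                "Resource": [
                    # Foundation-model ARNs leave the account field empty.
                    f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
                    for model_id in model_ids
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy as JSON, ready to paste into the IAM console.
    print(json.dumps(invoke_only_policy("us-east-1", ALLOWED_MODELS), indent=2))
```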

Supported Models

- AWS Titan Text Embeddings V1: A foundation model that converts text into numerical vector representations (embeddings). These embeddings capture semantic meaning and support applications such as text retrieval, semantic similarity, and clustering. The model accepts up to 8k tokens, produces 1,536-dimensional embeddings, handles multiple languages, and is optimized for low latency and low cost (source: Amazon Web Services Docs, Amazon Titan Text Embeddings).

- AWS Titan Text Embeddings V2: The successor to V1. It also accepts up to 8k tokens, but lets you choose the output vector size (1,024, 512, or 256 dimensions), which can help optimize storage and performance. It is well suited to Retrieval-Augmented Generation (RAG), document search, and text classification, and was trained on more than 100 languages (source: Amazon Web Services Docs, Amazon Titan Text Embeddings).

- AWS Titan Multimodal Embeddings V1: Accepts text, images, or both, and maps them into a shared embedding space, so semantically related text and images produce similar vectors. This makes it useful for searching images by text, finding similar images, and combining text and image information to improve search and recommendations. It supports up to 8,192 tokens of text and a maximum input image size of 5 MB (source: Amazon Web Services Docs, Titan Multimodal Embeddings G1).

- Cohere Embed English V3: Cohere's embedding model for English text, optimized for semantic search, clustering, and text classification. It produces dense vector representations that capture fine-grained semantic detail, making it suitable for natural language processing tasks that depend on a nuanced understanding of the text (source: Cohere Command & Embed).

- Cohere Embed Multilingual V3: Extends Embed English V3 to many languages, making it a good fit for multilingual applications. It is optimized for cross-lingual search, translation alignment, and global content understanding, and aims to produce consistent semantic representations across languages, so the same idea expressed in different languages maps to similar vectors (source: Cohere Command & Embed).
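Each supported model is addressed on Bedrock by its own model ID and expects a slightly different JSON request body. The Python mapping below is a sketch based on the public Bedrock request formats as we understand them. The model IDs and field names are assumptions to verify against the Bedrock documentation for your region, and `build_request` is a hypothetical helper, not part of the node.

```python
import base64
import json
from typing import Optional

# Display names from this page mapped to assumed Bedrock model IDs.
MODEL_IDS = {
    "AWS Titan Text Embeddings V1": "amazon.titan-embed-text-v1",
    "AWS Titan Text Embeddings V2": "amazon.titan-embed-text-v2:0",
    "AWS Titan Multimodal Embeddings V1": "amazon.titan-embed-image-v1",
    "Cohere Embed English V3": "cohere.embed-english-v3",
    "Cohere Embed Multilingual V3": "cohere.embed-multilingual-v3",
}


def build_request(model: str, text: str, image: Optional[bytes] = None,
                  input_type: str = "search_document") -> tuple[str, str]:
    """Return (model_id, JSON body) for one text and an optional image."""
    model_id = MODEL_IDS[model]
    if model_id.startswith("cohere."):
        # Cohere V3 takes a batch of texts plus an input_type hint:
        # "search_document" for stored text, "search_query" for questions.
        body = {"texts": [text], "input_type": input_type}
    elif model_id == "amazon.titan-embed-image-v1":
        body = {"inputText": text}
        if image is not None:
            # Images are sent as base64-encoded strings.
            body["inputImage"] = base64.b64encode(image).decode("ascii")
    else:
        # Both Titan text models accept a single inputText string.
        body = {"inputText": text}
    return model_id, json.dumps(body)
```

The responses differ as well: the Titan models return a single `embedding` array, while Cohere returns an `embeddings` list with one vector per input text, so code that switches models has to parse each shape.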

Example usage

A good use for the AWS Bedrock Embedder is building smart FAQ systems. For example, you can feed it a long document about solar energy; the embedder converts each passage into a vector that can be stored in a vector store. Later, when someone asks a question like "What are the benefits of solar energy?", the system embeds the question with the same model, compares that vector to the stored ones, and returns the most similar passages as the answer.
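The retrieval step in this example is essentially a nearest-neighbour search: embed each passage once, embed the question with the same model, and rank passages by cosine similarity. The Python sketch below keeps vectors in a plain list instead of a real vector store and takes the embedding function as a parameter, so it could be backed by a Bedrock call or, as here, by a toy stand-in. `TinyVectorStore` and `toy_embed` are hypothetical names used only for illustration.

```python
import math
from typing import Callable

Vector = list[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Hypothetical in-memory stand-in for a real vector store."""

    def __init__(self, embed: Callable[[str], Vector]):
        self.embed = embed
        self.items: list[tuple[str, Vector]] = []

    def add(self, chunk: str) -> None:
        # Embed once at indexing time and keep the vector next to the text.
        self.items.append((chunk, self.embed(chunk)))

    def search(self, question: str, k: int = 1) -> list[str]:
        # The question must be embedded with the same model as the chunks.
        q = self.embed(question)
        ranked = sorted(self.items, key=lambda item: cosine_similarity(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def toy_embed(text: str) -> Vector:
    # Toy stand-in for a real embedding model: counts of three keywords.
    words = text.lower().split()
    return [float(words.count(w)) for w in ("benefits", "cost", "installation")]


if __name__ == "__main__":
    store = TinyVectorStore(toy_embed)
    for chunk in [
        "Solar energy benefits include lower bills and fewer emissions.",
        "Installation usually takes one to three days.",
        "Panel cost has fallen sharply.",
    ]:
        store.add(chunk)
    print(store.search("What are the benefits of solar energy?"))
    # → ['Solar energy benefits include lower bills and fewer emissions.']
```

A real embedding model captures meaning rather than keyword counts, so it would also match questions that paraphrase a passage; the ranking logic stays the same.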