OpenAI LLM

Integrate with OpenAI's API for advanced language model interactions

What is the OpenAI LLM?

The OpenAI LLM connects to OpenAI's API with an API key you provide and gives access to OpenAI language models, including GPT-4o and GPT-4. You can configure the model name and temperature to tailor the output, and optionally associate requests with an organization ID. The LLM generates text with the selected model, which makes it suitable for a wide range of natural language processing tasks.

How to use it?

- Add the OpenAI Node to Your Workflow:

  - Drag and drop the OpenAI Node from the node library into your workflow canvas.

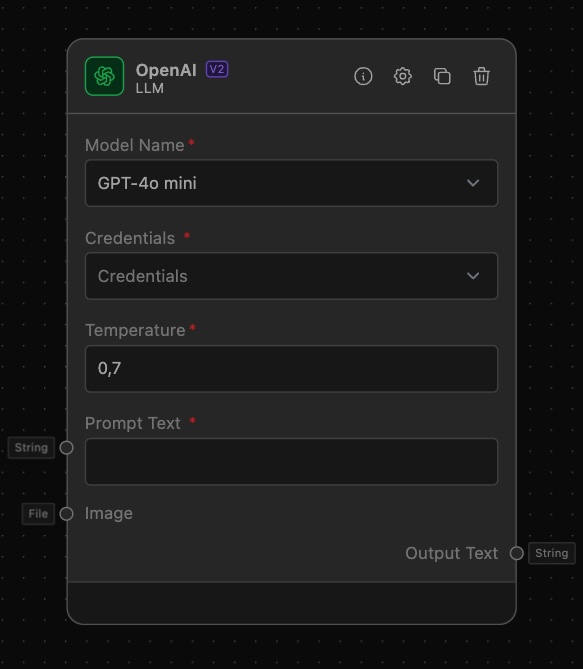

- Configure the OpenAI Node:

  - API Key: Enter your OpenAI API key in the OpenAI API Key field. This is a mandatory field. You can obtain an API key from your OpenAI account; see OpenAI's API documentation.

  - Model Name: Select the model you wish to use from the Model Name dropdown. Options include:

    - GPT-4o

    - GPT-4o-mini

    - GPT-4 Turbo with Vision 128K

    - GPT-4 8K

  - Temperature: Set the temperature for the model. This parameter controls the creativity of the output. A lower value makes the output more focused and deterministic, while a higher value makes it more random. The default is 0.7.

  - Credentials: Select an API key that you have added in the Project Settings or in the Org Credentials tab.

- Connect Input Nodes:

  - To provide input to the OpenAI Node, you need a node that supplies a text prompt. The Text Input Node is ideal for this purpose.

  - Text Input Node Configuration:

    - Template: Define a prompt template, e.g., "Create a list of ideas for my startup."

  - Connect the Text Input Node to the OpenAI Node:

    - Drag an edge from the output anchor of the Text Input Node to the input anchor of the OpenAI Node.

- Connect Output Nodes:

  - To handle the generated responses from the OpenAI Node, you need an output node such as the Output Node to display the text.

  - Output Node Configuration:

    - Node Type: Select Text to display the generated text.

  - Connect the OpenAI Node to the Output Node:

    - Drag an edge from the output anchor of the OpenAI Node to the input anchor of the Output Node.
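For readers curious what the node's settings correspond to at the API level, here is a minimal sketch; it is not the node's actual implementation. It assembles, without sending, a request to OpenAI's Chat Completions endpoint from the same settings: API key, model name, temperature, and optional organization ID. The helper name `build_chat_request` and the mapping from dropdown labels to API model identifiers are assumptions made for illustration.

```python
import json

# Assumed mapping from the dropdown labels to OpenAI API model identifiers.
MODEL_IDS = {
    "GPT-4o": "gpt-4o",
    "GPT-4o-mini": "gpt-4o-mini",
    "GPT-4 Turbo with Vision 128K": "gpt-4-turbo",
    "GPT-4 8K": "gpt-4",
}

CHAT_COMPLETIONS_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key, model_name, prompt, temperature=0.7, organization=None):
    """Hypothetical helper: return (url, headers, body) for a chat completion call."""
    if not api_key:
        raise ValueError("The OpenAI API key is mandatory.")
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("Temperature must be between 0 and 2.")
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    if organization:
        # Optional: associate the request with an organization ID.
        headers["OpenAI-Organization"] = organization
    body = json.dumps({
        "model": MODEL_IDS[model_name],
        "temperature": temperature,
        "messages": [{"role": "user", "content": prompt}],
    })
    return CHAT_COMPLETIONS_URL, headers, body


url, headers, body = build_chat_request(
    api_key="sk-your-key-here",  # placeholder, not a real key
    model_name="GPT-4o-mini",
    prompt="Create a list of ideas for my startup.",
)
print(url)
print(body)
```

The sketch only builds the request so it can be inspected without network access or a real key; inside a workflow, the OpenAI Node handles the actual call for you.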

Supported Models

GPT-4o mini

- Description: The most cost-efficient small model, smarter and cheaper than GPT-3.5 Turbo, with vision capabilities.

- Context Size: 128K tokens

- Knowledge Cutoff: October 2023

- Strengths: Cost-efficient, vision-enabled.

GPT-4o

- Description: The most advanced multimodal model, faster and cheaper than GPT-4 Turbo, with superior vision capabilities.

- Context Size: 128K tokens

- Knowledge Cutoff: October 2023

- Strengths: Advanced, multimodal, strong vision abilities.

GPT-4 Turbo with Vision 128K

- Context Size: 128K tokens

- Knowledge Cutoff: December 2023

- Strengths: Faster and more cost-efficient than standard GPT-4.

GPT-4 8K

- Description: A version of GPT-4 with an 8K token limit, suitable for tasks requiring smaller context windows.

- Context Size: 8K tokens

- Knowledge Cutoff: September 2021

- Strengths: Efficient for short tasks requiring high intelligence.
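One practical use of the context sizes listed above is checking which models can hold a given prompt. The following is a rough sketch under stated assumptions: the helper `models_that_fit` is hypothetical, "128K" is taken as 128,000 tokens and "8K" as 8,192 tokens, and the token count of the prompt is supplied by the caller rather than computed here.

```python
# Nominal context sizes for the models listed above, in tokens.
# 128K is taken as 128,000 and 8K as 8,192; treat both as approximations.
CONTEXT_SIZES = {
    "GPT-4o mini": 128_000,
    "GPT-4o": 128_000,
    "GPT-4 Turbo with Vision 128K": 128_000,
    "GPT-4 8K": 8_192,
}


def models_that_fit(prompt_tokens, reserved_for_output=1_000):
    """Hypothetical helper: list models whose context window can hold
    the prompt plus a budget reserved for the generated reply."""
    needed = prompt_tokens + reserved_for_output
    return [name for name, size in CONTEXT_SIZES.items() if size >= needed]


print(models_that_fit(500))     # short prompt
print(models_that_fit(20_000))  # long prompt
```

The reply budget of 1,000 tokens is an arbitrary illustrative value; adjust it to the length of output you expect.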

Example of Usage

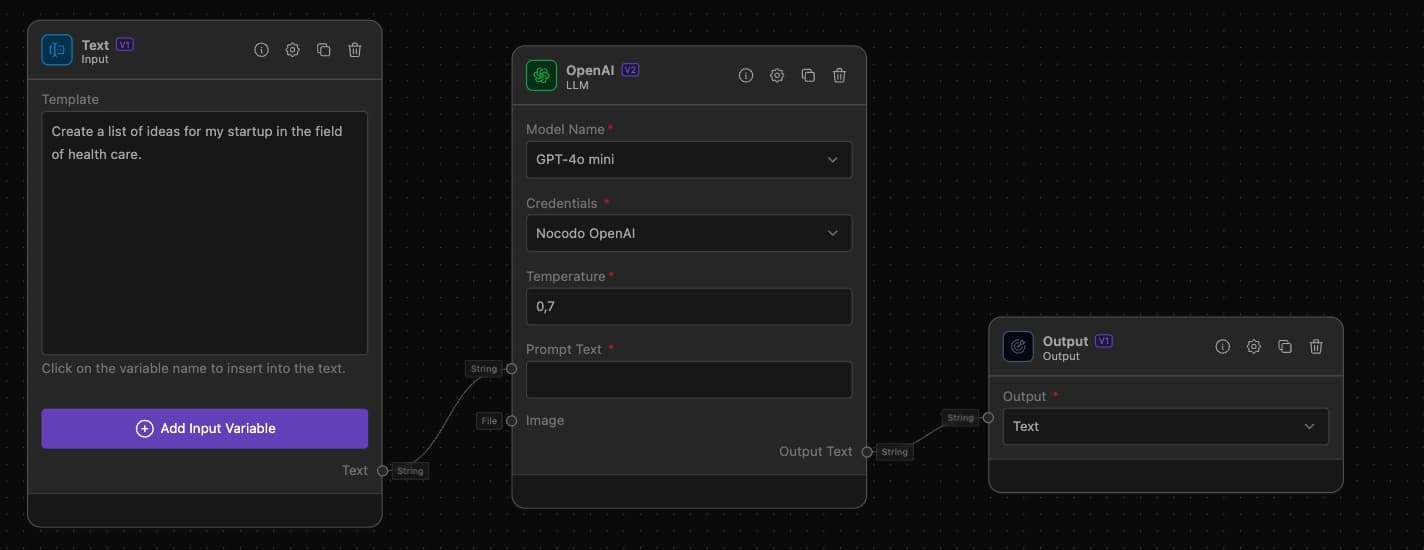

Example Task: OpenAI LLM with Text Input

Objective: Creating a Startup Ideas Generator

This setup allows users to enter an industry in the Text Input, prompting the OpenAI Node to generate a list of startup ideas based on the input, and displaying the result through the Output Node.

Step-by-Step Setup

- Text Input Configuration:

  - Drag the Text Input onto the canvas.

  - Configure the Template field with: "Create a list of ideas for my startup in the field of {industry}."

  - This node will allow users to input the industry field dynamically.

- OpenAI LLM Configuration:

  - Drag the OpenAI LLM onto the canvas.

  - Enter your OpenAI API key in the OpenAI API Key field.

  - Select GPT-4 8K from the Model Name dropdown.

  - Set the Temperature to 0.7 for balanced creativity.

- Output Configuration:

  - Drag the Output onto the canvas.

  - Select Text for the output type to display the generated text.

- Connecting the Workflow:

  - Draw an edge from the output of the Text Input to the input of the OpenAI LLM.

  - Draw an edge from the output of the OpenAI LLM to the input of the Output.
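The data flow of this workflow can be sketched in a few lines of Python. This is an illustration only: the function names are hypothetical, the LLM step is a stand-in that makes no API call, and using Python's `str.format` for the `{industry}` placeholder is an assumption about how template substitution behaves.

```python
def text_input_node(template, **variables):
    """Fill the prompt template, e.g. {industry}, with user-provided values."""
    return template.format(**variables)


def fake_llm_node(prompt, model="GPT-4 8K", temperature=0.7):
    """Stand-in for the OpenAI LLM node; a real node would call OpenAI's API here."""
    return f"[{model}, temperature={temperature}] response to: {prompt}"


def output_node(text):
    """Text output: return the text that would be displayed."""
    return text


TEMPLATE = "Create a list of ideas for my startup in the field of {industry}."

# Edges: Text Input -> OpenAI LLM -> Output
prompt = text_input_node(TEMPLATE, industry="renewable energy")
result = output_node(fake_llm_node(prompt))
print(prompt)
print(result)
```

Each function plays the role of one node, and each function call mirrors one edge drawn on the canvas.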

Tips for Effective Prompts

- Be Specific: Clear and specific prompts lead to better results. For example, instead of "Write a story," try "Write a short story about a brave knight who saves a village."

- Use Variables: Utilize variables in your prompt templates to dynamically adjust inputs. For instance, "{industry}" can be replaced with user-provided values.

- Adjust Temperature: Experiment with the temperature setting to balance creativity and coherence in the generated text. A setting of 0.7 is a good starting point for most use cases.
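To build intuition for the temperature tip, the sketch below applies the standard temperature-scaled softmax to a few made-up token scores. It illustrates the general technique only; how OpenAI's models apply temperature internally is not described in this document, and the function name and scores are assumptions.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, dividing by the temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0.2, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At lower temperatures the probability mass concentrates on the top-scoring token, which is why the output becomes more focused; at higher temperatures the distribution flattens and less likely tokens are sampled more often.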

By following these steps, you can integrate the OpenAI Node into your workflows and use OpenAI's language models for a variety of tasks. For more information on OpenAI's API and capabilities, refer to the OpenAI API documentation.